How to do data-driven testing using external data files?

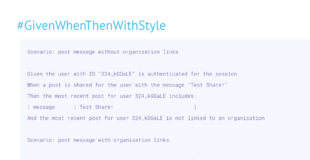

The next challenge in the Given-When-Then series is a common problem when developing APIs or modules that connect to external systems.

How can I deal with hard-to-read example data (e.g. GUIDs, JSON messages, URLs, …)?

Here is the challenge

Scenarios that suffer from this problem are usually driven by developers, and in rare cases by business users familiar with a technical message format (for example, SWIFT or the ISO 20022 standard). To make things more interesting, let’s use the business-driven case. Note that many Given/When/Then tools allow you to specify a parameter on multiple lines by enclosing it in three quote marks on both ends, and the following sample uses this trick to make the scenario slightly easier to format on a narrow screen:

Scenario: transactions in payment accepted

Given an account EE382200221020145685 has balance of 100.00 EUR

When the system receives the payment message

"""

MSGID000002

2020-07-28T10:33:56

1

22.00

Tom Nook

PaymentInformationId

TRF

1

22.00

SEPA

2020-07-28

Tom Nook

AT611904300234573201

ABAGATWWXXX

SLEV

E2E-ID-1

22.00

HABAEE2X

Beneficiary1

EE382200221020145685

RemittanceInformation1

"""

Then the account EE382200221020145685 balance should be 122.00 EUR

And the payment status report generated should be

"""

STAT20200728103356001

2020-07-28T10:33:56

MSGID000002

pain.001

ACTC

"""

Now this is obviously horrible to read and maintain, but remember that business users working in SEPA processing are intimately familiar with this format, so they will happily provide examples or discuss individual fields with developers. If you really want to understand the individual fields in this message, check out the ISO 20022 message definitions (look for payments initiation), or perhaps one of the ISO 20022 payments guides published by local banks – here is an example from ABN AMRO.

There are several issues we need to address with specifications of this type:

1) Regardless of whether the format is business-readable or not, the verbosity of this structure makes it very difficult to understand what’s actually happening. And this is just a single scenario, showing an accepted message with a single payment (the group status GrpSts value ACTC means fully accepted). We would also want to show examples with multiple payments in the same message (multiple CdtTrfTxInf blocks, with NbOfTxs and CtrlSum updated to reflect the whole group), combined with examples for the response statuses PART (partially accepted) or RJCT (rejected).

2) The messages have fields that change automatically, such as generated identifiers and timestamps, which might cause problems for repeated test runs (identifiers might be rejected as duplicates, or timestamps might become too old in a few months and get rejected as invalid).

3) The tests are too broad and brittle. They are testing too many things, such as field mappings in payment request and response, customer balance updates, conditions for message acceptance or rejection and so on. The tests might start failing for too many reasons, and it will be difficult to pinpoint problems.

Your challenge is to propose ways to clean up this mess, while keeping solid test coverage, and being able to introduce further examples easily.

Solving: How to deal with external data formats?

The challenge was dealing with external data in Given-When-Then scenarios, especially when the structure makes information hard to read. Typical cases are JSON and XML formats, often containing auto-generated unique identifiers or long URLs.

For a detailed description of the problem, and the example scenario containing ISO20022 payment messages, check out the original post. This article contains an analysis of the community responses, and some additional tips on dealing with similar problems.

Apply the Single Responsibility Principle

Scenarios containing structured data formats, such as JSON or XML, usually try to demonstrate several aspects of a system at the same time. The payments example from the challenge article is trying to answer the following six questions:

- how will incoming messages be structured? (ISO 20022 XML)

- how should SEPA credit transfer (CdtTrfTxInf) transactions affect account balance?

- how to translate between a transaction outcome and a payment status report? (value of GrpSts)

- how do outgoing payment status reports relate to incoming messages? (contents of OrgnlGrpInfAndSts)

- how to format outgoing payment status reports? (ISO 20022 XML, auto-assigned GrpHdr values)

- how does a payment message flow through the system? (overall integration)

The original scenario depends on so many system aspects that it will frequently break and require attention. At the same time, it’s too complicated to understand, so it will be very difficult to change. To add insult to injury, for all that complexity, it explains just a tiny piece of each of the six topics. There are many more elements in the ISO 20022 XML, many other ways SEPA credit transfers can be executed depending on the message contents, various other statuses that transactions can generate, and additional payment flows through the system. We would need hundreds of similar scenarios to fully cover the payment processing with tests.

The typical way to solve these kinds of problems in software design is to apply the Single Responsibility Principle (SRP), and this works equally well when writing Given-When-Then scenarios. SRP says that a single module should only have a single responsibility, and that particular responsibility should not be shared with any other modules.

SRP improves understanding

When a feature file or scenario is fully responsible for a single topic, and just that topic, it is easier to understand than a mix of files sharing topics. In addition, self-contained topics are very useful as documentation. If someone wants to know how SEPA credit transfers work, they only need to read a single file, and do not have to worry about missing something important from hundreds of other files.

SRP reduces brittleness

When scenarios depend on many system aspects, feature files usually suffer from shotgun surgery. Introducing a small change in functionality leads to changes in many feature files.

With clear responsibility separation, each scenario only has a single reason to change. Changing XML parser functionality should not have any effect on transaction settlement feature files. Applying SRP to Given-When-Then feature files and scenarios makes it relatively easy to contain the effects of changes, and significantly reduces the cost of maintenance.

SRP facilitates evolutionary design

In addition to containing unexpected changes, applying SRP also facilitates intended change. If we want to introduce new rules for transaction settlement, and only one feature file needs to change, this will be a lot easier to implement than updating dozens of related files. We shouldn’t need to search through specifications for incoming message parsing or the scenarios for payment status reports, just to make sure they are not using the old settlement rules in some weird way.

Applying SRP to Given-When-Then when you can influence software design

Specification by Example works best if you can use collaborative analysis to influence software design. In such cases, using the Single Responsibility Principle for feature files directly helps to apply SRP to the underlying system as well. We can notice various concerns at the stage when we’re still discussing examples, which lets us experiment with different ways of slicing the problem. This responsibility separation will likely translate to system design, if we can influence it.

Exploring the domain model along with examples can be very beneficial at this stage, but you don’t have to fully design the system to get value from responsibility separation. You can start with an informal split, and refine it later. Ken Pugh wrote a detailed response with nice example scenarios demonstrating this approach. I will show just a small extract here, so make sure to read his post for more details.

Ken suggested creating a Payment model and using that to process transactions internally, then splitting the overall flow into three parts:

- Convert Payment XML to Payment Model

- Use data in Payment Model to apply payment and receive a status return

- Convert status return to Status XML

The payment model can use whatever names are meaningful for the business context. The scenario showing how to settle credit transfers then does not need to involve any XML processing at all. Here’s an example from Ken’s response:

Given account is

|Account | Balance |

|EE382200221020145685 |100.00 EUR|

When payment message contains

|CdtrAcct-Id-IBAN | Amt-InstdAmt | GrpHdr-CreDtTm |

|EE382200221020145685| EUR 22.00 | 2020-07-28T10:33:56 |

Then account is now

|Account |Balance |

|EE382200221020145685 |122.00 EUR|

And payment status report includes

|GrpHdr-CreDtTm |

|2020-07-28T10:33:56 |

Ken proposes using completely separate scenarios showing how to transform incoming XML into the Payment Model, and how to transform the outcome into an XML message. Those are all unique responsibilities.
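To make the middle stage concrete, here is a minimal Python sketch of a payment model and a settlement function that never touches XML. The names (`Payment`, `StatusReturn`, `apply_payment`), the EUR-only assumption and the acceptance rule (reject non-EUR or non-positive amounts and unknown accounts) are hypothetical illustrations, not taken from Ken’s post.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Payment:
    """Hypothetical payment model: business names, no XML structure."""
    creditor_iban: str
    amount: Decimal
    currency: str
    created_at: str  # copied from GrpHdr-CreDtTm of the incoming message


@dataclass(frozen=True)
class StatusReturn:
    group_status: str  # "ACTC" for accepted, "RJCT" for rejected
    created_at: str


def apply_payment(balances: dict[str, Decimal], payment: Payment) -> StatusReturn:
    """Settle one payment against in-memory balances (assumed to be EUR only)."""
    accepted = (
        payment.currency == "EUR"
        and payment.amount > 0
        and payment.creditor_iban in balances
    )
    if accepted:
        balances[payment.creditor_iban] += payment.amount
    return StatusReturn("ACTC" if accepted else "RJCT", payment.created_at)


# Same data as Ken's scenario: 100.00 EUR plus an incoming 22.00 EUR payment.
balances = {"EE382200221020145685": Decimal("100.00")}
status = apply_payment(
    balances,
    Payment("EE382200221020145685", Decimal("22.00"), "EUR", "2020-07-28T10:33:56"),
)
```

Because the function takes and returns plain values, the step definitions behind Ken’s tables only need to build a `Payment` and read back the balance and status.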

Splitting the responsibilities in this way, even informally, immediately provides better understanding and more flexibility. For example, we have an option of moving the XML parsing and serialization examples into a developer-oriented testing tool, such as JUnit or NUnit. This solves lots of problems with external data formats easily. If the business users aren’t really working with the complex external format, just hide it in step definitions. Fin Kingma explains this further:

Of course, some systems might require tests that focus more on the structure of the messages, but in my opinion Gherkin isn’t made for that. Gherkin is intended for functional tests, which can always be phrased in simple sentences (which is often the most difficult part).
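When the XML mapping stays out of feature files, a developer-oriented test can pin it down instead. The sketch below uses Python’s built-in `unittest` in the role JUnit or NUnit would play. It assumes a simplified, namespace-free fragment that borrows ISO 20022 pain.001 element names (a real message carries a namespace and many more elements), and `parse_payments` is a hypothetical helper.

```python
import unittest
import xml.etree.ElementTree as ET
from decimal import Decimal

# Simplified, namespace-free fragment borrowing ISO 20022 pain.001 element names.
SAMPLE = """<CstmrCdtTrfInitn>
  <GrpHdr><MsgId>MSGID000002</MsgId><CreDtTm>2020-07-28T10:33:56</CreDtTm></GrpHdr>
  <PmtInf>
    <CdtTrfTxInf>
      <Amt><InstdAmt Ccy="EUR">22.00</InstdAmt></Amt>
      <CdtrAcct><Id><IBAN>EE382200221020145685</IBAN></Id></CdtrAcct>
    </CdtTrfTxInf>
  </PmtInf>
</CstmrCdtTrfInitn>"""


def parse_payments(xml_text: str) -> list[dict]:
    """Hypothetical stage-one mapper: payment XML -> plain payment records."""
    root = ET.fromstring(xml_text)
    created_at = root.findtext("GrpHdr/CreDtTm")
    payments = []
    for tx in root.iter("CdtTrfTxInf"):
        amount = tx.find("Amt/InstdAmt")
        payments.append({
            "creditor_iban": tx.findtext("CdtrAcct/Id/IBAN"),
            "amount": Decimal(amount.text),
            "currency": amount.get("Ccy"),
            "created_at": created_at,
        })
    return payments


class ParsePaymentsTest(unittest.TestCase):
    """Mapping rules are checked here, not in business-facing feature files."""

    def test_single_credit_transfer(self):
        [payment] = parse_payments(SAMPLE)
        self.assertEqual(payment["creditor_iban"], "EE382200221020145685")
        self.assertEqual(payment["amount"], Decimal("22.00"))
        self.assertEqual(payment["currency"], "EUR")
        self.assertEqual(payment["created_at"], "2020-07-28T10:33:56")
```

Saved as a module, this runs with `python -m unittest <module>`; the feature files then only need the already-parsed values.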

However, if the business users are genuinely concerned with external formatting, which they might be in the case of payment messages, we could describe the formatting in feature files. This is where the three-quotes trick comes in handy: it allows us to include multi-line content in a scenario. For example:

Scenario: Formatting successful outcome to ISO 20022 response

Given incoming payment request MSGID000002 was fully accepted

And the transaction timestamp was 2020-07-28T10:33:56

When the payment status is generated with ID STAT20200728103356001

Then ISO 20022 payment report should be

"""

STAT20200728103356001

2020-07-28T10:33:56

MSGID000002

pain.001

ACTC

"""

Apart from the obvious benefits of reducing complexity and improving the maintainability of feature files (and the underlying software system), influencing the design in this way also allows us to handle multiple payment message formats easily. ISO 20022 is the current standard, but standards change, and there are other competing standards for other types of payments (such as SWIFT). Decoupling transaction processing and settlement from a specific format makes it possible to handle format changes easily, and to support alternative formats. We can build a different parser and formatter module, and plug it into the transaction settlement service.
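A minimal Python sketch of that decoupling, assuming a structural interface (`typing.Protocol`): the settlement service depends only on `PaymentParser`, so an ISO 20022 parser and an alternative parser can be swapped without touching settlement. `SettlementService`, both parsers and the semicolon-separated format are hypothetical stand-ins, and the XML is a simplified, namespace-free fragment.

```python
import xml.etree.ElementTree as ET
from decimal import Decimal
from typing import Protocol


class PaymentParser(Protocol):
    """Anything that turns a raw message into (creditor IBAN, amount) pairs."""

    def parse(self, raw: str) -> list[tuple[str, Decimal]]: ...


class Iso20022Parser:
    """Reads CdtTrfTxInf blocks from a simplified, namespace-free pain.001 fragment."""

    def parse(self, raw: str) -> list[tuple[str, Decimal]]:
        root = ET.fromstring(raw)
        return [
            (tx.findtext("CdtrAcct/Id/IBAN"), Decimal(tx.findtext("Amt/InstdAmt")))
            for tx in root.iter("CdtTrfTxInf")
        ]


class SemicolonParser:
    """Hypothetical alternative format: one 'IBAN;amount' pair per line."""

    def parse(self, raw: str) -> list[tuple[str, Decimal]]:
        pairs = []
        for line in raw.strip().splitlines():
            iban, amount = line.split(";")
            pairs.append((iban.strip(), Decimal(amount.strip())))
        return pairs


class SettlementService:
    """Settles payments without knowing which message format they came from."""

    def __init__(self, parser: PaymentParser, balances: dict[str, Decimal]):
        self.parser = parser
        self.balances = balances

    def receive(self, raw: str) -> None:
        for iban, amount in self.parser.parse(raw):
            self.balances[iban] = self.balances.get(iban, Decimal("0")) + amount
```

Supporting a new format then means writing one more class with a `parse` method; the settlement rules and their feature files stay untouched.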

Handling dynamic values when you control the model

When you can influence system design, dealing with dynamically changing values becomes easy: just model them in. Instead of working with the system clock, create a business clock and use it both to process actual messages and to pre-define the timestamps during testing. Create a transaction identifier service, and use it both to generate unique identifiers in real work and to pre-set message IDs during testing.
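A minimal Python sketch of both services, with hypothetical names (`BusinessClock`, `TransactionIdService`). The generated identifier format (`STAT` + timestamp + three-digit counter) is an assumption chosen to resemble the example report ID, not something the challenge defines.

```python
from collections import deque
from datetime import datetime
from typing import Callable, Iterable


class BusinessClock:
    """Single source of 'now' for the whole system; tests can pin it."""

    def __init__(self, now: Callable[[], datetime] = datetime.now):
        self._now = now

    @classmethod
    def fixed(cls, moment: datetime) -> "BusinessClock":
        return cls(lambda: moment)

    def now(self) -> datetime:
        return self._now()


class TransactionIdService:
    """Generates unique report IDs; tests can pre-set the next ones."""

    def __init__(self, clock: BusinessClock):
        self._clock = clock
        self._counter = 0
        self._preset = deque()

    def preset(self, ids: Iterable[str]) -> None:
        self._preset.extend(ids)

    def next_id(self) -> str:
        # Pre-set identifiers win; otherwise derive one from business time.
        if self._preset:
            return self._preset.popleft()
        self._counter += 1
        return f"STAT{self._clock.now():%Y%m%d%H%M%S}{self._counter:03d}"


# Test set-up: pin time and identifiers instead of mocking random numbers.
clock = BusinessClock.fixed(datetime(2020, 7, 28, 10, 33, 56))
ids = TransactionIdService(clock)
```

Production code would construct `BusinessClock()` with the default system clock; only the test wiring differs.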

Some people like to mock out the random number generator, so they can get repeatable unique values from GUID generators. I prefer to model the higher level service, and specify the outcomes more directly. For example, we could set the system time and the identifier sequence as prerequisites to the formatting scenario:

Scenario: Formatting successful outcome to ISO 20022 response

Given the next transaction identifier is STAT20200728103356001

And the current time is 2020-07-28T10:33:56

And an incoming payment request MSGID000002 was fully accepted

When the payment status is generated

Then ISO 20022 payment report should be

"""

STAT20200728103356001

2020-07-28T10:33:56

MSGID000002

pain.001

ACTC

"""

Applying SRP when you can’t influence software design

In situations when you can’t adjust the model, you have to adjust the approach to writing test cases. David Wardlaw posted a response to the challenge taking a very similar approach to Ken. He divides the responsibility into three areas: validating incoming messages, processing payments and formatting results. But the middle part of his solution does not try to influence the processing model. Instead of using a separate business object, it specifies only the most important elements of the ISO message.

Scenario: Transactions in payments

Given an account EE382200221020145685 has balance of 100.00 EUR

When the system receives the payment message with the following initiating party values

| MsgID | CreditDateTime | Payment | InitiatingParty |

| MSGID000002| 2020-07-28T10:33:56| 22.00 | Tom Nook |

And the payment message has the following Payment information

| PaymentMethod | PaymentAmount | PaymentDate | DebtorAccountIBAN | FinancialInstitutionIDBIC | CreditorAccountIBAN |

| TRF | 22.00 |2020-07-28 | AT611904300234573201 | ABAGATWWXXX | EE382200221020145685|

Then the following values are returned in the payment report status

| MsgID | CreditDateTime | OriginalMessageID|

| STAT20200728103356001 | 2020-07-28T10:33:56 | MSGID000002 |

This is quite an interesting solution for situations when you can’t really influence the design.

About 10 years ago I worked with one of the largest UK hedge funds on migrating to a third-party trading platform. The fund developed additional modules and rules to plug into the framework, but the message formats and domain object structure were already set by the platform. The whole point of the migration was to use the third-party system for most of the work, so building and maintaining a separate translation layer between the platform and the custom company model would make no sense. We wrote most of the tests the way David proposes, and it was quite effective. For each major message type, we had templates stored next to the test files, so business users could easily inspect and edit them. The individual tests would load up the correct template, overwrite the fields specified in the test, and send the message onwards.
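The load-override-send flow can be sketched in Python with the standard library’s `xml.etree.ElementTree`. Everything here is hypothetical: the inline `TEMPLATE` stands in for a template file stored next to the tests, and `fill_template` with its `path@attribute` key convention is an illustrative choice, not a description of the tooling used on that project.

```python
import xml.etree.ElementTree as ET

# Inline stand-in for a template file kept next to the tests (simplified, no namespace).
TEMPLATE = """<CstmrCdtTrfInitn>
  <GrpHdr><MsgId>TEMPLATE-ID</MsgId><CreDtTm>2020-01-01T00:00:00</CreDtTm></GrpHdr>
  <PmtInf>
    <CdtTrfTxInf>
      <Amt><InstdAmt Ccy="EUR">1.00</InstdAmt></Amt>
      <CdtrAcct><Id><IBAN>EE382200221020145685</IBAN></Id></CdtrAcct>
    </CdtTrfTxInf>
  </PmtInf>
</CstmrCdtTrfInitn>"""


def fill_template(template: str, overrides: dict[str, str]) -> str:
    """Return a copy of the template with only the overridden fields changed.

    Keys are simple ElementTree paths relative to the root element; a trailing
    '@name' sets that attribute instead of the element text. Path predicates
    such as [@Ccy='EUR'] are not supported by this sketch.
    """
    root = ET.fromstring(template)
    for key, value in overrides.items():
        path, _, attribute = key.partition("@")
        element = root.find(path)
        if element is None:
            raise KeyError(f"template has no element at {path!r}")
        if attribute:
            element.set(attribute, value)
        else:
            element.text = value
    return ET.tostring(root, encoding="unicode")


# A test overrides just what it cares about and leaves the rest of the template alone.
message = fill_template(TEMPLATE, {
    "GrpHdr/MsgId": "MSGID000002",
    "PmtInf/CdtTrfTxInf/Amt/InstdAmt": "22.00",
})
```

Failing loudly on an unknown path keeps a typo in a feature file from silently sending the unmodified template.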

In Domain Driven Design, Eric Evans calls these contexts “conformist”. Such an approach can be appropriate when there is a dominant external standard or a strong dependency. By following the external model, you usually lose flexibility. But when the dependency is so strong that you do not use the additional flexibility anyway, you can gain speed by just conforming to it. If business users already “speak” ISO 20022, forcing them to translate work into something else you invent is a waste of effort.

With conformist contexts, it’s typically useful to relate the set-up or action part of the test to a prepared message template. In the previous example, the step “When the system receives the payment message” would use the “payment message” part to find the correct template (most likely payment-message.xml). This would make it easy to add additional templates such as transfer-message.xml and load them using steps such as “When the system receives the transfer message”. If you want to be more formal about it, use a Background section, as in the following example:

Feature: ISO 20022 payment requests

Background:

Given the following message templates

| template | file |

| credit transfer | sepa-sct.xml |

| direct debit | sepa-ddb.xml |

Scenario: single payment transfer

Given an account EE382200221020145685 has balance of 100.00 EUR

When the "credit transfer" message is received with the following payments

| CdtTrfTxInf.Amt.InstdAmt Ccy | CdtTrfTxInf.Amt.InstdAmt |

| EUR | 22.00 |

...

Scenario: multiple payment transfer

Given an account EE382200221020145685 has balance of 100.00 EUR

When the "credit transfer" message is received with the following payments

| CdtTrfTxInf.Amt.InstdAmt Ccy | CdtTrfTxInf.Amt.InstdAmt |

| EUR | -22.00 |

| EUR | 300.00 |

...

This approach is particularly effective when you provide easy access to the message templates from the test results or your living documentation system, so business users can quickly load up sepa-sct.xml and see what it actually contains.
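A step definition behind these scenarios could look the template up by name and turn each table row into its own `CdtTrfTxInf` block, recomputing `NbOfTxs` and `CtrlSum` so the header stays consistent with the payments. This Python sketch assumes a simplified, namespace-free `sepa-sct.xml` (inlined as `SEPA_SCT`) and uses hypothetical names (`TEMPLATE_FILES`, `load_template`, `build_credit_transfer`).

```python
import copy
import xml.etree.ElementTree as ET
from decimal import Decimal
from pathlib import Path

# Filled from the Background table: template name -> file next to the tests.
TEMPLATE_FILES = {"credit transfer": "sepa-sct.xml", "direct debit": "sepa-ddb.xml"}


def load_template(name: str, directory: Path) -> str:
    return (directory / TEMPLATE_FILES[name]).read_text(encoding="utf-8")


# Inline stand-in for a simplified sepa-sct.xml.
SEPA_SCT = """<CstmrCdtTrfInitn>
  <GrpHdr><MsgId>MSGID000002</MsgId><NbOfTxs>1</NbOfTxs><CtrlSum>0.00</CtrlSum></GrpHdr>
  <PmtInf>
    <NbOfTxs>1</NbOfTxs><CtrlSum>0.00</CtrlSum>
    <CdtTrfTxInf><Amt><InstdAmt Ccy="EUR">0.00</InstdAmt></Amt></CdtTrfTxInf>
  </PmtInf>
</CstmrCdtTrfInitn>"""


def build_credit_transfer(template: str, rows: list[dict[str, str]]) -> str:
    """Clone the template's CdtTrfTxInf once per table row and fix the totals."""
    root = ET.fromstring(template)
    pmt_inf = root.find("PmtInf")
    prototype = pmt_inf.find("CdtTrfTxInf")
    pmt_inf.remove(prototype)
    total = Decimal("0")
    for row in rows:
        tx = copy.deepcopy(prototype)
        amount = tx.find("Amt/InstdAmt")
        amount.text = row["CdtTrfTxInf.Amt.InstdAmt"]
        amount.set("Ccy", row["CdtTrfTxInf.Amt.InstdAmt Ccy"])
        pmt_inf.append(tx)
        total += Decimal(amount.text)
    # Both the group header and the payment block carry a count and control sum.
    for block in (root.find("GrpHdr"), pmt_inf):
        block.find("NbOfTxs").text = str(len(rows))
        block.find("CtrlSum").text = f"{total:.2f}"
    return ET.tostring(root, encoding="unicode")
```

Invalid amounts such as -22.00 pass straight through on purpose: deciding whether to reject them belongs to the settlement rules, not to message construction.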

When working with a template and partial overrides, it’s often useful to see the full message after execution for troubleshooting purposes. Various Given-When-Then tools enable this, but their approaches differ slightly. With SpecFlow+, you can just output troubleshooting information to the system console (Console.Write()), and you will see it in the results next to the related scenario steps. On a related note, you can use this trick together with customised reports to include screenshots, draw charts and more. Check out the SpecFlow Customising Reports Tutorial for more information.

Handling dynamic values in conformist scenarios

If you cannot influence system design, abstracting away unique value generators and timestamps might not be possible, but there are several good ways to minimise that problem:

- Try handling variable message elements in step definitions. For example, do not compare messages as strings directly, but implement message comparison so that it ignores timestamps. For identifiers, you could check that the incoming and outgoing identifiers match regardless of what specific value they have.

- If you are unable to contain variable elements inside step definitions, Ken Pugh suggested using a pre-processor to automatically generate more complex feature files from simpler templates.

- Another option to deal with changing values, especially if they come from external dependencies, is to use a Simplicator for test automation. Simplicators make it easier to fake a third-party system from the point of view of the tests. In this case you can use them to add or remove dynamic fields on the fly, so feature files do not have to deal with them. This would be similar to implementing Ken Pugh’s preprocessor, but it would work in test automation instead of modifying feature files. For more information on Simplicators, also check out the excellent book Growing Object-Oriented Software, Guided by Tests.
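The first option can be sketched in Python as a comparison that flattens both messages and skips elements expected to change between runs, plus a separate check that the report’s OrgnlMsgId points back at the request’s MsgId. Which elements count as volatile (`VOLATILE`) is an assumption to adjust per message type, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumption: element names whose values are regenerated on every run.
VOLATILE = frozenset({"MsgId", "CreDtTm"})


def normalise(xml_text: str, ignore: frozenset[str] = VOLATILE) -> list[tuple]:
    """Flatten a message to (path, text, attributes) tuples, skipping volatile elements."""
    flat = []

    def walk(element: ET.Element, path: str) -> None:
        for child in element:
            if child.tag in ignore:
                continue
            child_path = f"{path}/{child.tag}"
            text = (child.text or "").strip()
            flat.append((child_path, text, tuple(sorted(child.attrib.items()))))
            walk(child, child_path)

    root = ET.fromstring(xml_text)
    walk(root, root.tag)
    return flat


def messages_match(expected: str, actual: str) -> bool:
    return normalise(expected) == normalise(actual)


def ids_correlate(request: str, report: str) -> bool:
    """The report must point back at the request's MsgId, whatever that value is."""
    request_id = ET.fromstring(request).findtext(".//MsgId")
    return ET.fromstring(report).findtext(".//OrgnlMsgId") == request_id
```

Keeping the correlation check separate means the test still proves the identifiers line up, even though it never asserts their concrete values.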

Dealing with complex workflows

A common complaint against using SRP for test design is that some things will fall through the cracks. The usual concern is that each single responsibility might be well tested, but the overall big picture will be lost. In principle, there’s no reason for this to happen as long as you treat the overall flow coordination as a separate responsibility (which is why overall integration was the last of the six questions listed earlier).

Using the typical test terminology, the fact that we have a bunch of unit tests doesn’t remove the need for an integrated system test, but it does significantly reduce the risk which the system test needs to cover. If we know that individual pieces of a flow work well, we can focus the integrated test on proving that the pieces are connected correctly. To do so, I like to introduce a few simple examples showing the overall flow, in a separate feature file. They should not try to prove all the difficult boundaries of individual steps, but instead they should deal with the edge cases for the flow itself. From Lee Copeland’s seven potential perspectives of test coverage, this overall feature file should only deal with the last one, the paths through the system.

Fin Kingma provided a nice example:

I usually create functional sentences that describe the most important values of the json/xml message, and handle the message creation in the implementation behind. For instance:

Given 'John' has a balance of '100' on his account

When the system receives a payment message with:

| sum | id | account |

| 22 | 1234 | John |

Then the balance on 'John' account should be '122.00' EUR

And a payment status report should be generated for message '1234'

Fin’s example shows the initial balance, the most important message fields coming in, and the most important aspects of the outcome. It’s great as an overall proof that the various messaging components are connected correctly, and it would nicely complement more specific feature files that document how individual transaction processing rules work.

Feature files on this level could use a specific message format, similar to what David proposed, or just abstract it away as in Fin’s example. Fin explains his approach:

In the step definition, I hide the logic in a paymentMessageService, on how to create a payment message with default data + the provided data. This way, even your step definitions look clean, and all your paymentMessage creation logic is moved to an appropriate service.
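A minimal Python sketch of such a service, assuming hypothetical names (`PaymentMessage`, `PaymentMessageService`) and default values borrowed from the challenge example. A step definition would pass the table row straight to `create`, so it never deals with message structure.

```python
from dataclasses import dataclass, replace
from decimal import Decimal


@dataclass(frozen=True)
class PaymentMessage:
    """Default payment data; a scenario only overrides what matters to it."""
    message_id: str = "MSGID000001"
    creditor_account: str = "EE382200221020145685"
    amount: Decimal = Decimal("1.00")
    currency: str = "EUR"
    initiating_party: str = "Tom Nook"


class PaymentMessageService:
    """Hypothetical service: builds messages from defaults plus table values."""

    def __init__(self, accounts_by_owner: dict[str, str]):
        self._accounts = accounts_by_owner

    def create(self, row: dict[str, str]) -> PaymentMessage:
        overrides = {}
        if "id" in row:
            overrides["message_id"] = row["id"]
        if "sum" in row:
            overrides["amount"] = Decimal(row["sum"])
        if "account" in row:
            # Feature files name the owner; the service resolves the IBAN.
            overrides["creditor_account"] = self._accounts[row["account"]]
        return replace(PaymentMessage(), **overrides)


# What a step definition for "the system receives a payment message with:" might do.
service = PaymentMessageService({"John": "EE382200221020145685"})
message = service.create({"sum": "22", "id": "1234", "account": "John"})
```

Serialising `PaymentMessage` into the actual wire format would live in the same service, keeping it out of both feature files and step definitions.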

Example project

The project demonstrates the key automation aspects and how to implement the following ideas:

- Use the concept of business time, provided by a domain service, to control timestamps and make tests repeatable

- Use the idea of automated unique identifier service to avoid testing for random numbers or complex GUIDs

- Simplify the manipulation of complex data formats by using templated messages, and listing in feature files only the values that are important for a specific test case

- Print out the full message (with replacements applied to a template) to test logs, so they can be audited in the reports.

Learn more about the example project or check out the code on GitHub.